Model Student Midterm answers 2016: Essay 2: Personal / professional topic

Christa Van Allen

Singularity and Human Empathy

Within the realm of future narratives, the singularity is defined as a hypothetical moment in time when artificial intelligence and other technologies have become so advanced that humanity undergoes a dramatic and irreversible change. Sci-Fi already touches on such things, but I will explore books and other story mediums as a full-blown discussion of how such advancements change people socially and mentally. I came across the idea while watching a PS3 graphics trailer called ‘Kara’, which I will discuss further on in my essay. The trailer startled me and made me think: If something has become so self-aware, so autonomous that it seeks the human condition, does humanity have any right to deny it? I plan to use these thoughts for my future vision presentation.

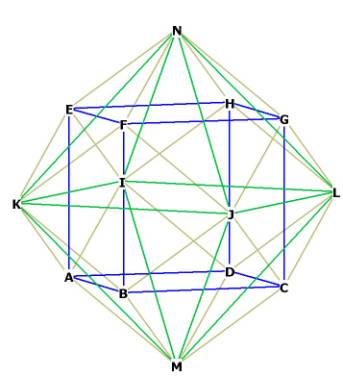

Singularities can branch in various directions, making the singularity an easy topic to cover when trying to address the alternative future narrative. In The Time Machine, two separate creatures are revealed to be humanity’s future descendants. The ones that exemplify the singularity best are the Eloi, small, childish specimens of human evolution that have become so used to having their basic needs met by their subterranean cousins that they are lazy, dumb, and weak. They are unable to understand the very intelligence which keeps them alive, and so quite honestly they’re lucky that their farmers are the only predators they have to worry about. In their version of the singularity, social and economic classes diverged drastically, with nothing to worry about in terms of A.I. despite a believable advancement in the technology of comfort. That advancement may well have been only a small part of the problem, not the direct problem. It is something like the movie Wall-E, but without the happy ending.

In Mozart in Mirrorshades the tech in question is the device that allows Rice and his colleagues to meddle with history and split timelines. Again, the story is not about the artificial intelligence necessary for this machine to function, but about how the ranks of humanity prime decide to use it. The story is unsettling in several regards, displaying overt abuse of and cruelty toward the timeline that has been allowed to grow. The main characters, for lack of a better term, trash a good portion of history for resources and gems of art that existed in the time frame we’re shown, and probably in several others that we are not given access to. It’s an idea of a bad future that occurred because of how humanity used its advancements in technology, not how the technology itself reacted. Some characters act borderline sociopathic in their words or ideals, lacking more than a little natural empathy.

Admittedly, it is difficult to name effective examples from the course texts, because despite offering terrific examples of human reaction to a major tech advancement, they include no viewpoints from the technology side of the equation. One text I found that does acknowledge this side was written by the father of Sci-Fi robotics himself, Isaac Asimov. Known primarily for his development of the three infallible laws of robotics, Asimov wrote a book composed of short stories about humanity’s future with robots that are intelligent but somewhat less autonomous than humans. ‘Robbie’, the first story in ‘I, Robot’, details the bond between a young girl named Gloria and her caregiving robot babysitter, Robbie. They are separated by the girl’s mother amid the waning popularity of owning such machines, and then reunited when an event orchestrated by her father to show Robbie’s value fails and succeeds simultaneously. Robbie saves Gloria from being crushed in a factory with speed far outmatching any human's, and without any thought for his own safety, reinforcing what the girl’s father says earlier in the story: "He just can't help being faithful and loving and kind. He's a machine—made so. That's more than you can say for humans." It portrays the original, kind artificial intelligence and the nicer, but still realistic, reactions of humanity after the singularity.

Finally, the example that inspired me. Without giving too much about the trailer away, Kara is “…a third generation AX400 android” who can clean the house, mind the kids, act as a personal secretary, and is capable of so much more. She does not need to be fed or recharged; she comes equipped with a quantic battery that makes her autonomous for 173 years. When she is initialized in the factory she believes she is alive, and more than anything else, she wants to live. But she is a point of uncertainty: she is 100 percent free thinking, she can feel emotions, and she can theoretically choose to be whatever she wants, yet she is also still merchandise. The question posed by her dramatic awakening is: Does she have the right to be human? As of 2015, the graphics trailer she was part of has been followed by the announcement of a full game in which she is a main character, so I suppose the question will be answered in some context. But how humanity will react to it, how we could react to it in real life, has yet to be seen. Human sentience in human-like robots, and their desire to achieve the human condition, is a conundrum that demands answering.

What happens after a singularity in our world is speculative at best. The possibilities and discussions slow and speed progress at varying intervals, but the ideas are endlessly fascinating. The singularity could be apocalyptic, evolutionary, or any number of alternate results. It is a fast-approaching event that I face with uncertain excitement, and it is absolutely worthy of inclusion in the fiction of the future.
